Banana - GPUs For Inference

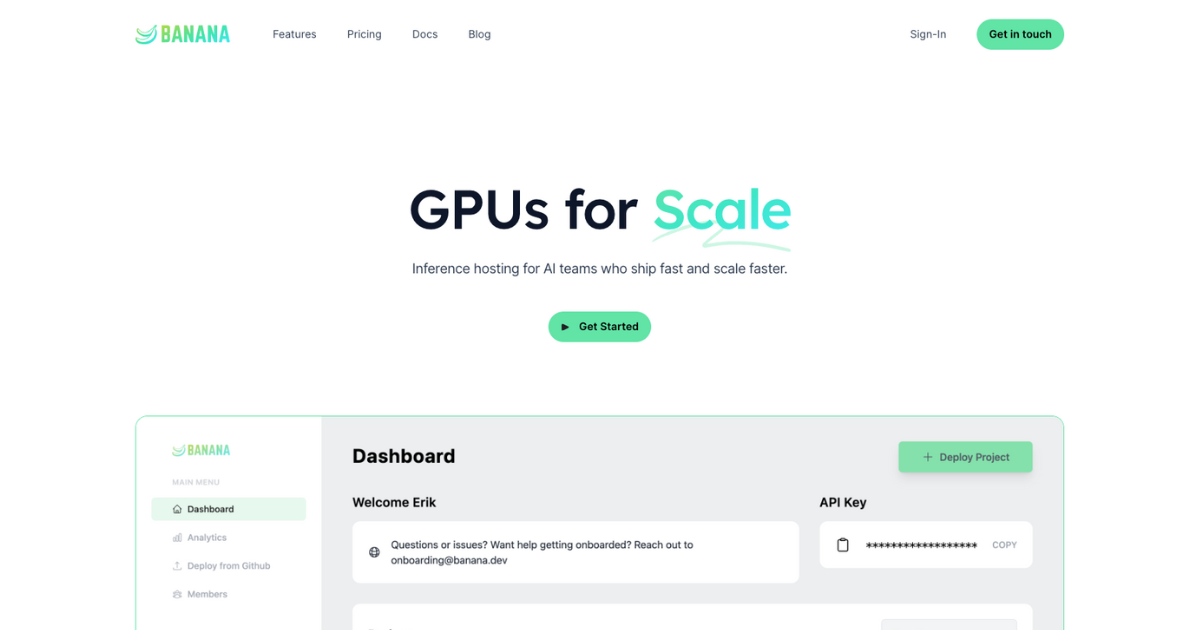

🍌🚀 Level up your AI projects with Banana - GPUs For Inference! 🤖🔥 Scale fast, optimize costs, and gain complete control over your GPU resources. March 31st promises exciting developments! 🌟🔍 #AITool #GPUInference #BananaAI

- Banana Serverless GPUs are being deprecated as of March 31st, with a promise of exciting developments to follow.

- Banana offers autoscaling GPUs for high-throughput inference, optimizing costs and performance.

- The platform provides a full DevOps experience including GitHub integration, CI/CD, tracing, logs, and more.

- Users have complete control over scaling and can monitor performance, request traffic, and business analytics in real time.

- Banana uses pass-through pricing: GPU time is billed at cost, with no markup.

- Customers can extend Banana's capabilities through an open API and customize their environment with their favorite libraries.

- For small teams, Banana offers a Team plan at $1,200/mo plus at-cost compute, with features such as logging, autoscaling, and business analytics.

- Enterprise customers can benefit from custom plans and features like SAML SSO, automation API, dedicated support, and customizable inference queues.

- For those in San Francisco, there's a unique "Banana Delivery" service: for $20, the CEO hand-delivers bananas, which are known for being rich in potassium.
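
To make the Team plan pricing above concrete, here is a minimal sketch of how a monthly bill might be estimated as the flat fee plus at-cost compute. The function name, the GPU-hour count, and the hourly rate are all hypothetical; the listing does not state actual GPU rates or how usage is metered.

```python
# Flat monthly Team plan fee, as stated in the listing.
TEAM_PLAN_BASE_USD = 1200.0


def estimate_monthly_cost(gpu_hours: float, gpu_rate_usd_per_hour: float) -> float:
    """Estimate a Team plan bill: flat fee plus compute billed at cost (no markup).

    Both arguments are illustrative assumptions, not published Banana figures.
    """
    if gpu_hours < 0 or gpu_rate_usd_per_hour < 0:
        raise ValueError("gpu_hours and gpu_rate_usd_per_hour must be non-negative")
    return TEAM_PLAN_BASE_USD + gpu_hours * gpu_rate_usd_per_hour


if __name__ == "__main__":
    # Hypothetical workload: 500 GPU-hours at an assumed $2.00/hour provider rate.
    print(estimate_monthly_cost(500, 2.00))
```

Under pass-through pricing, the compute term is simply the provider's rate times usage, so the only fixed cost in this sketch is the plan fee.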