Giskard - Open-source Solution for AI Quality

🚀 Discover Giskard! 🤖 An open-source AI tool revolutionizing model quality assurance. 🛡️ Detect biases, errors, and security risks effortlessly with just a few lines of code. 🌐 Perfect for data scientists, AI engineers, and compliance enthusiasts. Embrace AI excellence with Giskard! #AIQuality #MLTesting #OpenSource🔍

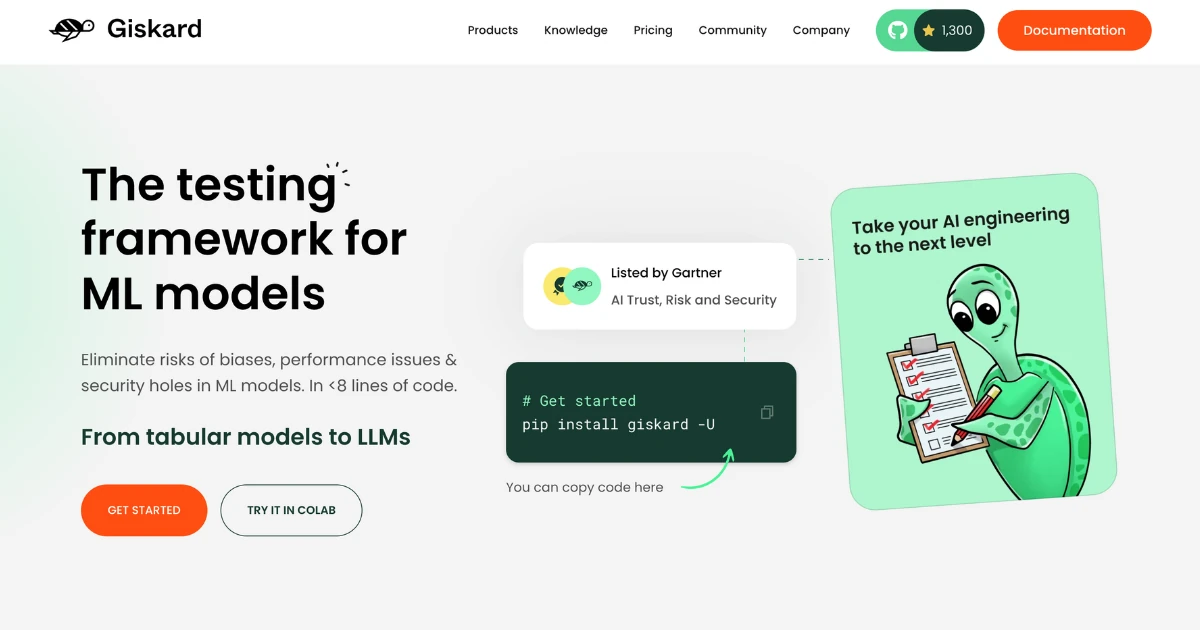

- Giskard is an AI testing framework designed to detect biases, performance issues, and security vulnerabilities in AI models with fewer than 10 lines of code.

- It addresses the shortcomings of manual testing and the lack of comprehensive AI risk coverage in existing MLOps tools.

- Giskard offers a holistic AI Quality framework that helps teams comply with regulations and streamline model deployment.

- The suite includes an ML Testing library for vulnerability detection, the AI Quality Hub for collaborative quality assurance, and LLMon for real-time monitoring of LLM-based applications.

- It is aimed at data scientists, ML engineers, AI governance specialists, and anyone who prioritizes performance, security, and compliance in AI models.

- Giskard makes it easier to gather feedback from business stakeholders and to collaborate with data scientists.

- The inclusive community around Giskard focuses on AI Quality and Responsible AI principles.

- The AI Quality Hub enables collaborative AI quality assurance, while LLMon helps diagnose critical AI safety risks in real time.

- Giskard's LLM Red Teaming service improves the safety and security of LLM applications by testing them against comprehensive threat models.

- Giskard also offers resources such as thought leadership articles, articles on red teaming techniques, and guidance on managing data drift in ML models.
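The bullets above describe an ML Testing library that detects performance biases in a few lines of code. The idea behind one such check can be sketched in plain Python: compute a model's accuracy on each slice of a feature and flag slices that fall too far below the global accuracy. This is not Giskard's API or implementation; the function names, the use of accuracy as the metric, and the 0.10 threshold are hypothetical choices for illustration only.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def find_underperforming_slices(
    rows: List[Dict[str, object]],
    feature: str,
    y_true: Sequence[int],
    y_pred: Sequence[int],
    threshold: float = 0.10,  # hypothetical: max tolerated absolute accuracy drop
) -> Dict[object, float]:
    """Hypothetical slice check: return {feature value: slice accuracy}
    for every slice whose accuracy is more than `threshold` below
    the model's global accuracy."""
    global_acc = accuracy(y_true, y_pred)

    # Group row indices by the value of the chosen feature.
    groups: Dict[object, List[int]] = defaultdict(list)
    for i, row in enumerate(rows):
        groups[row[feature]].append(i)

    flagged: Dict[object, float] = {}
    for value, idx in groups.items():
        slice_acc = accuracy([y_true[i] for i in idx], [y_pred[i] for i in idx])
        if global_acc - slice_acc > threshold:
            flagged[value] = slice_acc
    return flagged


# Usage: the model is perfect on "FR" rows but wrong on every "DE" row.
rows = [{"country": c} for c in ["FR", "FR", "FR", "FR", "DE", "DE"]]
y_true = [1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1]
print(find_underperforming_slices(rows, "country", y_true, y_pred))  # → {'DE': 0.0}
```

The global accuracy here is 4/6, which looks acceptable on its own; only the per-slice comparison reveals that the model fails completely on one group. A production testing framework would typically run many more detectors and metrics than this single check.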