LLM evaluation | promptfoo

promptfoo is a tool for evaluating and testing LLM prompt quality. It helps you refine prompts, measure output quality, and compare results, using built-in or custom metrics and an easy-to-use interface.

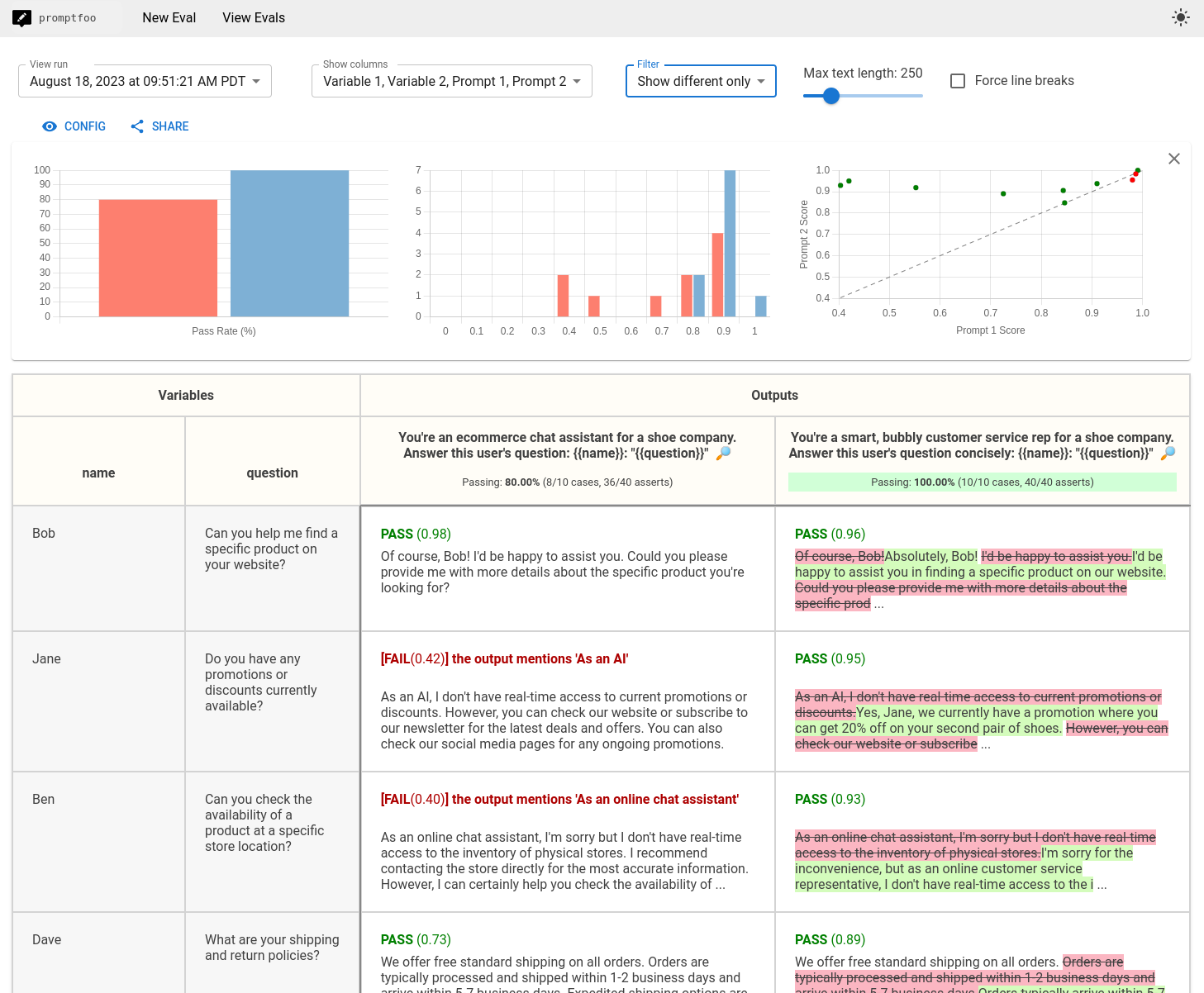

- promptfoo is a tool for iterating quickly on LLM prompts and models.

- It helps measure LLM quality and detect regressions.

- Users can create a test dataset of representative inputs, so that prompt changes are judged against the same cases rather than by impression.

- Evaluations can be scored with built-in metrics or with custom ones.

- Users can compare prompts and model outputs easily.

- promptfoo is used by LLM apps that together serve over 10 million users.

- It offers a web viewer and command-line interface.

- The documentation includes guides on running benchmarks and on evaluating factuality and RAG (retrieval-augmented generation) pipelines.

- Community support is provided through GitHub and Discord.
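
The workflow in the list above (a fixed test dataset, a metric, and a comparison across prompts) can be illustrated with a short Python sketch. This is a conceptual illustration only and does not use promptfoo's actual API or configuration format. `fake_model`, `PROMPTS`, `TEST_CASES`, `contains_metric`, and `pass_rates` are all hypothetical names made up for this example, and the "model" is an offline stand-in rather than a real LLM call.

```python
from typing import Callable, Dict, List


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    question = prompt.split("Question:", 1)[-1].strip()
    answer = f"Answer to: {question}"
    # Pretend the model only adds a courteous opener when the prompt asks for it.
    if "polite" in prompt.lower():
        answer = "Thank you for asking. " + answer
    return answer


# Two prompt variants to compare (assumed examples, not from promptfoo).
PROMPTS: Dict[str, str] = {
    "baseline": "Question: {question}",
    "polite": "Be polite. Question: {question}",
}

# A small dataset of representative inputs, each with an expectation.
TEST_CASES: List[dict] = [
    {"vars": {"question": "What is an eval?"}, "must_contain": "Thank you"},
    {"vars": {"question": "Define regression."}, "must_contain": "regression"},
]


def contains_metric(output: str, expected: str) -> bool:
    """A simple custom metric: case-insensitive substring match."""
    return expected.lower() in output.lower()


def pass_rates(
    prompts: Dict[str, str],
    tests: List[dict],
    model: Callable[[str], str],
) -> Dict[str, float]:
    """Run every prompt against every test case and return the pass rate per prompt."""
    rates: Dict[str, float] = {}
    for name, template in prompts.items():
        passed = 0
        for case in tests:
            output = model(template.format(**case["vars"]))
            if contains_metric(output, case["must_contain"]):
                passed += 1
        rates[name] = passed / len(tests)
    return rates


if __name__ == "__main__":
    for name, rate in pass_rates(PROMPTS, TEST_CASES, fake_model).items():
        print(f"{name}: {rate:.0%} of test cases passed")
```

Because every prompt variant is scored against the same cases, a drop in pass rate after a prompt or model change shows up as a regression instead of going unnoticed.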