👾 LM Studio - Discover and run local LLMs

🚀 Dive into the world of local LLMs with LM Studio! 🌟 Experiment with powerful models from Hugging Face offline, with GPU support for enhanced performance. 🤖💻 Perfect for enthusiasts and professionals alike! #AI #LMStudio #HuggingFace #LLMs

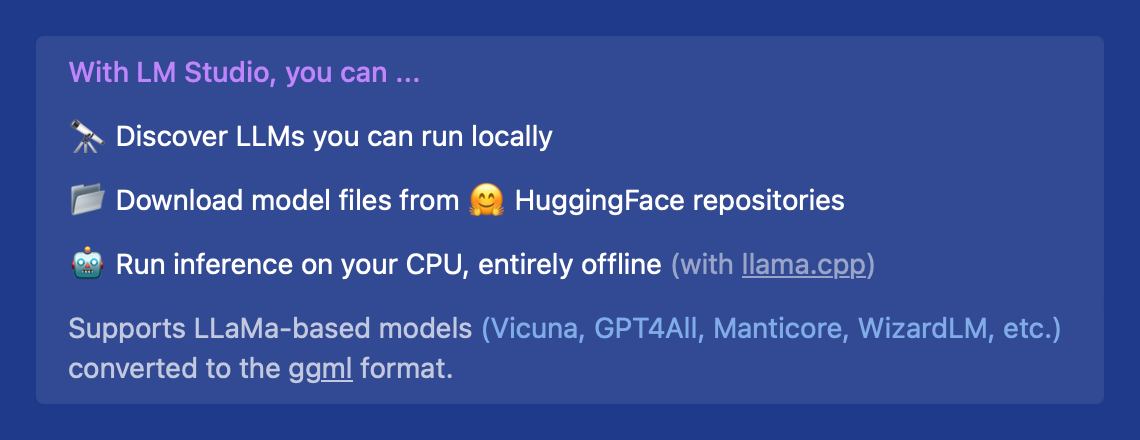

- LM Studio is a tool for running local LLMs such as Llama, Falcon, MPT, Gemma, Replit, GPT-NeoX, and other GGUF-format models from Hugging Face.

- Starting with version 0.2.16, LM Studio supports Google DeepMind's Gemma models (2B and 7B) on Mac (M1/M2/M3), Windows, and Linux. The same release also adds an improved downloader.

- Users can run LLMs entirely offline, chat with them through the in-app Chat UI, and download models from Hugging Face repositories.

- Compatible models on Hugging Face include Llama, MPT, and StarCoder variants.

- Minimum requirements: an Apple Silicon Mac (M1/M2/M3) or a Windows PC whose processor supports AVX2. Linux support is in beta.

- Privacy is a priority, as LM Studio does not collect data and keeps all information local on the user's machine.

- A separate request form is available for business use.

- The team behind LM Studio is hiring, with open positions listed on their website.

- Detailed hardware and software requirements differ by platform, with specific guidelines for Apple Silicon Macs, Windows PCs, and Linux PCs.

- The tool is free for personal use, and new features are continuously being developed.

- LM Studio is based in Brooklyn, New York, and welcomes inquiries about using the tool in a professional setting.
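The AVX2 requirement is a property of the processor, not the operating system, so you can check it before downloading. The sketch below is not part of LM Studio. It assumes a Linux machine, where the kernel lists x86 CPU feature flags in `/proc/cpuinfo`. The function names are hypothetical, and on other platforms the sketch returns `None` rather than guessing.

```python
import platform
from pathlib import Path
from typing import Optional


def cpu_flags_have_avx2(cpuinfo_text: str) -> bool:
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # x86 Linux kernels list features on lines like "flags\t\t: fpu ... avx2 ..."
        # Splitting on whitespace avoids false matches such as "avx512f".
        if sep and key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def local_cpu_has_avx2() -> Optional[bool]:
    """Check this machine; returns None where this Linux-only sketch can't tell."""
    cpuinfo = Path("/proc/cpuinfo")
    if platform.system() == "Linux" and cpuinfo.exists():
        return cpu_flags_have_avx2(cpuinfo.read_text())
    return None  # Not Linux (or no /proc): use a platform-specific tool instead


if __name__ == "__main__":
    result = local_cpu_has_avx2()
    if result is None:
        print("Could not determine AVX2 support on this platform.")
    else:
        print("AVX2 supported" if result else "AVX2 not reported by this CPU")
```

On Windows, a CPU information utility or the processor vendor's specification page reports the same feature.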
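GGUF is the single-file model format from the ggml/llama.cpp project. The sketch below illustrates what such a file looks like on disk by reading its fixed-size header. The header consists of the 4-byte magic `GGUF`, a little-endian `uint32` version, and then the tensor count and metadata key/value count. Those two counts are `uint32` in version 1 and `uint64` from version 2 on. This layout follows the GGUF specification in the ggml repository as we understand it. The function names and the `model.gguf` path are hypothetical, big-endian GGUF variants are not handled, and the metadata entries themselves are not parsed.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def parse_gguf_header(data: bytes) -> GGUFHeader:
    """Parse the leading header bytes of a (little-endian) GGUF file."""
    if len(data) < 8 or data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file")
    (version,) = struct.unpack_from("<I", data, 4)
    # Version 1 stored both counts as uint32; version 2+ uses uint64.
    fmt = "<II" if version == 1 else "<QQ"
    if len(data) < 8 + struct.calcsize(fmt):
        raise ValueError("truncated GGUF header")
    tensor_count, kv_count = struct.unpack_from(fmt, data, 8)
    return GGUFHeader(version, tensor_count, kv_count)


def read_gguf_header(path: str) -> GGUFHeader:
    """Read just enough bytes (4 + 4 + 8 + 8 = 24) to cover a v2+ header."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(24))
```

For example, `read_gguf_header("model.gguf")` on a downloaded model returns its format version and counts without loading any weights.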